At Criteo, we have a team inside the R&D department dedicated to improving the build process. Our goal is to allow developers to push their code in production quickly and safely. We think that one way to achieve this is to provide early feedback to developers, during the development process, so that they feel confident that they are not going to break something. As adventurous as we are, we don’t feel production is an appropriate place to find out we might have goofed. We want to give feedback on code commits before they go through the integration pipeline. The first two steps we introduced for this were:

This ensured that the quality of every commit is verified both by fellow coders and by automated tests, even before the commits are merged into the codebase.

This was good, but we still felt we could do better. We wanted to give a richer feedback to developers using static code analysis tools. Static analysis is a set of tools and processes to find bugs, vulnerabilities, design issues, inconsistencies and more, in source code. The standard way to achieve this is to periodically run a tool on the codebase and publish the results on nice dashboards in which we can track our projects’ quality. This is useful, but the feedback is provided on code that has been merged possibly hours ago. So we decided to run the static code analysis on every commit, and publish the detected issues as comments directly in Gerrit, as human reviewers do. This way, the static analysis report is displayed at pre-merge time, in a handy place where a conversation about the code is already happening.

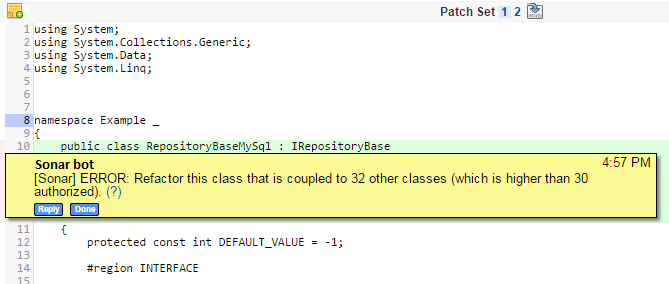

In the following screenshot you can see an issue detected by code analysis, reported directly in the review screen:

To sum up, we wanted:

- To run a static code analysis on every commit, with direct, actionable comments posted in code reviews.

- To also run a periodic analysis on the whole codebase, with persisted results and dashboards to track quality over time, browse issues, display statistics, etc.

Technical details

We setup this process for the C# part of our codebase, using several available tools:

- JetBrain Resharper, Microsoft Fxcop and NDepend are used to analyze C#.

- SonarQube is used to store the configuration of the above tools, run them, store the results, and display dashboards.

- Sputnik (a java tool) is used to link SonarQube and Gerrit.

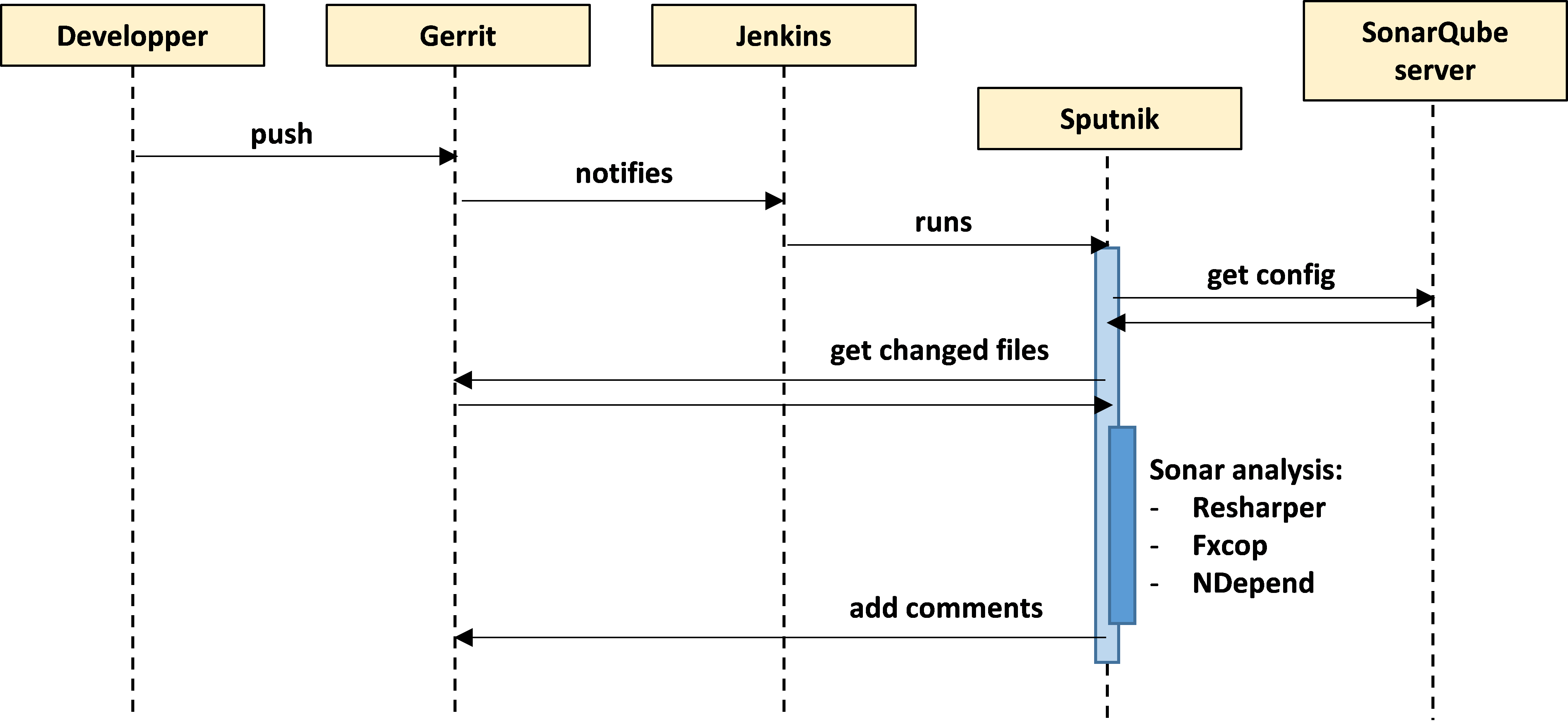

We have two jobs in Jenkins:

- One that is run on a regular basis. It runs a full SonarQube analysis on all our codebase.

- One that is triggered whenever a review is uploaded in Gerrit. It runs an incremental SonarQube analysis on the modified files of the patchset. The analysis is run through Sputnik, which uploads the analysis report to Gerrit. The incremental analysis is a feature of SonarQube that enables to report only issues that were not in the last full analysis. This way, we don’t flood the code reviews with old issues already present in the code: all comments we display are caused by the change (This supposes that the full analysis runs quite often).

The following sequence diagram helps to understand the workflow of the second job:

To setup this workflow, we integrated different existing software pieces, but we also had to do some development:

- We added the support of SonarQube in Sputnik (See here). Initially, Sputnik was able to run some tools like PMD and Findbugs, and publish results in reviews, but it was not able to run SonarQube.

- We added the support of NDepend to SonarQube by writing a plugin.

As we try to do whenever we can, both of these developments have been open sourced or contributed upstream.

Rollout

We took special care in the rollout of the process, to be sure to have a good reception within the R&D department. Because we like consistency, we wanted to have a set of rules common to all projects, without exceptions. We took several steps in order to converge to a set of rules that satisfies most developers.

We did a first configuration of quality profiles in SonarQube (Some rules are duplicated between Resharper, FxCop and NDepend).

Then, we activated the system for a few beta tester teams, during a significant period of time, allowing the teams to tune the list of quality rules. We like the idea of the developers using the rules they themselves have created, rather than anything imposed from outside.

Finally, after having presented it to the whole R&D, we activated the system for everybody. We provided a poll system allowing developers to vote for or against rules. We monitored that poll and removed rules for which the feedback was overwhelmingly negative (generally because they were generating too many false positives).

Conclusion

Our C# developers now have three sources of feedback on their work code before it gets integrated into the codebase: unit tests, code reviews and static code analysis. As future work, we intend to enrich the rules used to verify the code: this is very easy with NDepend, that allows to create custom rules using C# LINQ code.

Patrick Bruneton, Software Developer

-

CriteoLabs

Our lovely Community Manager / Event Manager is updating you about what's happening at Criteo Labs.

See DevOps Engineer roles