A tech conference in the Alps

In case you missed it, the 2019 edition of the SnowCamp conference took place this month in Grenoble, just as the first snowflakes of the year arrived in the city.

This conference was all about the latest development technologies and best practices, and it attracted quite a lot of people: 450 attendees in total, with presale tickets selling out in 40 minutes!

Of course, as a tech-focused company with an office in Grenoble, Criteo had to be there, so here we are!

Besides our booth, we were there to share some experience from our web app teams through a talk on State Management (the slides are here if you missed it).

Selected picks from some of the best talks

From Microservices to Migroservices (slides)

François Teychene presented the main issues that come with microservices and why we generally end up with what he called migroservices (a multitude of not-so-small services). Here are my main takeaways.

Why Microservices?

Microservices are generally used to resolve the following monolith issues:

- Complexity: it’s hard to grasp all the features because of the sheer number of functionalities and the technical debt that exists in every company, and it’s difficult to update or migrate code because of the number of projects, files and lines of code that need to be updated at the same time.

- Scalability: when performance issues arise, adding hardware or duplicating servers is a first solution, but it doesn’t help scale specific modules of the monolith.

Microservices seem magic but… end up not being as simple as they look.

Microservices to reduce complexity?

Let’s consider these 3 types of complexity:

- Business complexity: complexity of the business needs

- Necessary technical complexity: complexity of the components you need to build your service (servers, DB, network…)

- Accidental complexity: usually known as technical debt

Business complexity

Microservices can help reduce business complexity by splitting it into smaller pieces. But beware: splitting along technical components usually does not reduce this complexity.

Let’s consider the layered monolith as if it was

Technical complexity

Microservices will increase technical complexity a lot: having a network between functions means that each call takes time and some calls will fail.

Failure needs to be part of the design of every piece (not the case in a monolith, where a function always returns and at worst throws an exception). Furthermore, a transaction spanning multiple services is much more complex than a DB transaction in a monolith. Lastly, recovering from errors with a plan B (a fallback) is a better option than propagating an error 500 up the call chain (otherwise, stay with a monolith).
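To make the “plan B” idea concrete, here is a minimal TypeScript sketch (our illustration, not code from the talk): a remote call is raced against a time budget, and on timeout or error the caller receives a degraded fallback value instead of an error 500. The `withFallback` helper, the 200 ms default budget and the recommendation service are all hypothetical.

```typescript
// Reject if `promise` has not settled after `ms` milliseconds.
function withTimeout<T>(promise: Promise<T>, ms: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const expired = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, expired]).finally(() => clearTimeout(timer));
}

// Call a remote dependency; on timeout or error, return the plan B value
// (flagged as degraded) instead of propagating the failure to the caller.
async function withFallback<T>(
  call: () => Promise<T>,
  fallback: T,
  ms = 200,
): Promise<{ value: T; degraded: boolean }> {
  try {
    return { value: await withTimeout(call(), ms), degraded: false };
  } catch {
    return { value: fallback, degraded: true };
  }
}

// Usage with a hypothetical recommendation service that answers too slowly.
const fetchRecommendations = (): Promise<string[]> =>
  new Promise((resolve) => setTimeout(() => resolve(["personalized"]), 1000));

withFallback(fetchRecommendations, ["best sellers"]).then(({ value, degraded }) => {
  console.log(value, degraded); // [ 'best sellers' ] true
});
```

Returning a `degraded` flag alongside the value lets the caller decide whether to surface the downgrade, for example by showing generic rather than personalized content.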

How one service calls another is not just a matter of format (JSON…). It is also about defining actions (which actions a service provides on a model) and how services find each other and communicate: hard-coded URLs quickly become unmanageable with many services, each running many instances. Service discovery quickly becomes necessary.
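Here is a minimal sketch of what service discovery replaces, assuming an in-memory registry with hypothetical service names and addresses: instances register under a logical name and callers resolve that name (round-robin here) instead of hard-coding URLs. Production tools such as Consul, Eureka or Kubernetes DNS add health checks, expiry and replication on top of this basic idea.

```typescript
// Minimal in-memory registry: logical service name -> list of instance URLs.
class ServiceRegistry {
  private readonly instances = new Map<string, string[]>();
  private readonly cursors = new Map<string, number>();

  register(service: string, url: string): void {
    const urls = this.instances.get(service) ?? [];
    if (!urls.includes(url)) urls.push(url); // registering twice is a no-op
    this.instances.set(service, urls);
  }

  deregister(service: string, url: string): void {
    const urls = (this.instances.get(service) ?? []).filter((u) => u !== url);
    this.instances.set(service, urls);
  }

  // Pick the next instance of `service`, cycling through all registered ones.
  resolve(service: string): string {
    const urls = this.instances.get(service) ?? [];
    if (urls.length === 0) throw new Error(`no instance registered for ${service}`);
    const cursor = this.cursors.get(service) ?? 0;
    this.cursors.set(service, (cursor + 1) % urls.length);
    return urls[cursor % urls.length];
  }
}

// Usage: callers only know the logical name "pricing".
const discovery = new ServiceRegistry();
discovery.register("pricing", "http://10.0.0.1:8080");
discovery.register("pricing", "http://10.0.0.2:8080");
console.log(discovery.resolve("pricing")); // http://10.0.0.1:8080
console.log(discovery.resolve("pricing")); // http://10.0.0.2:8080
```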

Automation is no longer optional.

Accidental complexity

Microservices might reduce the cost of paying down technical debt, as smaller parts of the code are involved.

On the other hand, beware of debugging: you need to add aggregation of all this information. It is the key to being able to debug, as logs can’t be investigated file by file on multiple servers, and monitoring now covers dozens of services, each running on multiple instances.
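One common way to make aggregated logs usable (a general practice, not something presented in the talk) is to emit structured JSON lines that all carry the same correlation ID for a given request, so the aggregator can rebuild that request’s path across services. A minimal sketch, where the `x-correlation-id` header and the log field names are assumptions:

```typescript
import { randomUUID } from "node:crypto";

type LogLine = { ts: string; service: string; correlationId: string; level: string; msg: string };

// Reuse the caller's correlation ID if there is one; otherwise this service is the
// entry point of the request and starts a new one (the header name is an assumption).
function correlationIdFrom(headers: Record<string, string | undefined>): string {
  return headers["x-correlation-id"] ?? randomUUID();
}

// One JSON object per line is easy for a log shipper to parse and index centrally.
function logLine(service: string, correlationId: string, level: string, msg: string): string {
  const line: LogLine = { ts: new Date().toISOString(), service, correlationId, level, msg };
  return JSON.stringify(line);
}

// Aggregator side: keep one request's lines from all services, oldest first
// (this assumes the servers' clocks are reasonably synchronized).
function traceOf(lines: string[], correlationId: string): LogLine[] {
  return lines
    .map((raw) => JSON.parse(raw) as LogLine)
    .filter((line) => line.correlationId === correlationId)
    .sort((a, b) => (a.ts < b.ts ? -1 : a.ts > b.ts ? 1 : 0));
}

// Usage: lines from two services, interleaved with another request's lines.
const requestId = correlationIdFrom({});
const collected = [
  logLine("front", requestId, "info", "GET /campaigns"),
  logLine("pricing", "another-request", "info", "price computed"),
  logLine("pricing", requestId, "error", "database timeout"),
];
console.log(traceOf(collected, requestId).map((l) => `${l.service}: ${l.msg}`));
```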

Conclusion

Before starting, ask yourself:

“If you can’t build a monolith, what makes you think microservices are the answer?”

The main risk you face is that if your monolith is a big ball of mud, then with microservices it will likely become a distributed big ball of mud, with much higher complexity.

Your main goal should be to decouple the code, as coupling is where the real complexity lies. Microservices will not resolve it magically, but they can be a tool for it. If you go this way, here is the main advice:

- Build small, focused services with a (business) bounded context, loosely coupled with other services and with clear team ownership

- The protocol between services should be portable

- Automation is a requirement, though it has a high entry cost

(Micro|Migro)services at Criteo?

Automation, aggregated logs and monitoring are part of our daily life at Criteo, and we are used to managing this technical complexity, so this talk really resonated with us.

Splitting services is indeed a real challenge. Finding the right place to cut and coming up with bounded contexts is complex, especially when it implies changing team organization and ownership. We are often tempted to cut along technical or team boundaries instead of business ones.

The second huge challenge we face is that we cannot start from microservices that are free of other projects’ libraries (historically, a few of these libraries provide services). We have to find the right size for our services:

- Too big means coupling multiple teams for releases (and rollbacks), which slows down the rollout of changes to production.

- Too small means having numerous versions of the services, each using different versions of the same libraries, which can quickly become a nightmare in production. Furthermore, changing a contract inside a monolith and releasing it is much faster than updating a contract between multiple services with progressive releases.

The last (smaller) challenge is how to manage transactions (including their rollback and partially completed operations) across multiple services.
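A pattern often used for this is the saga (our illustration, not something presented in the talk): each step of a distributed operation comes with a compensating action, and if a later step fails, the already completed steps are compensated in reverse order. Unlike a DB transaction there is no isolation, so other services may observe the half-completed state before compensation runs. A minimal TypeScript sketch with hypothetical step names:

```typescript
type Step = {
  name: string;
  run: () => Promise<void>;
  compensate: () => Promise<void>; // semantically undoes `run` (refund, cancel, release…)
};

// Run the steps in order; if one fails, compensate the completed ones in reverse order.
async function runSaga(steps: Step[]): Promise<{ ok: boolean; log: string[] }> {
  const completed: Step[] = [];
  const log: string[] = [];
  for (const step of steps) {
    try {
      await step.run();
    } catch {
      log.push(`failed ${step.name}`);
      for (const done of [...completed].reverse()) {
        // A real saga would persist its progress and retry failed compensations.
        await done.compensate();
        log.push(`compensated ${done.name}`);
      }
      return { ok: false, log };
    }
    completed.push(step);
    log.push(`ran ${step.name}`);
  }
  return { ok: true, log };
}

// Usage with hypothetical steps; the third one fails.
const fakeStep = (name: string, fails = false): Step => ({
  name,
  run: async () => {
    if (fails) throw new Error(`${name} failed`);
  },
  compensate: async () => {},
});

runSaga([fakeStep("reserve budget"), fakeStep("create campaign"), fakeStep("notify billing", true)]).then(
  ({ log }) => console.log(log),
);
```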

Despite these challenges, we work to decouple our business needs and have modules with business meaning, which is by no means an easy task! Microservices then end up being the cherry on the cake, but not our primary goal 😉

How can we lose our feature overweight? (slides in French)

Estelle Landry kept us entertained for 45 minutes with a really fun but still serious presentation on how we could reduce the complexity of our apps, using a parallel with

Not a trivial problem

The first solution that usually comes up is to rewrite everything from scratch, but in general we cannot afford that choice, and we would rather have solutions that keep us from getting into this situation again.

The second popular option is to take some time to tackle the technical debt. But are we sure we are tackling the right problem? If we dig into it, there are different kinds of complexity: essential complexity and accidental complexity. The former is the minimal complexity needed to solve a problem, while the latter is created by the technical choices made to solve it. When adding features, both kinds of complexity increase, and you get stuck when your essential complexity gets too high: that is the symptom of feature overload.

How can we fix it? Solution #1 could be to remove features. It’s not a simple decision to make, nor an easy one to put into practice, as it requires communicating with the feature’s few users, and in the end it takes a lot of energy just to no longer have something. We are attached to what we create and have a hard time deleting things. Are we going to congratulate developers who actually remove features? Aren’t they supposed to add them?

Solution #2 is to let the application die and restart from scratch. When you have the means to do it, it can be a successful idea: it happened to the FogBugz team, who ended up creating Trello! But it is still not a solution that would satisfy every company.

What other alternatives do we have?

As this seemed to be a dead end, Estelle looked for analogies in other fields and found one in human behavior: we are all hyperconnected, and our brains are always hyperactive. What if our applications were like our brains: overloaded?

Following this analogy, she applied psychology tips for reducing mental load to our apps, and used them to share a full set of tools with us.

1. Start with a blank page

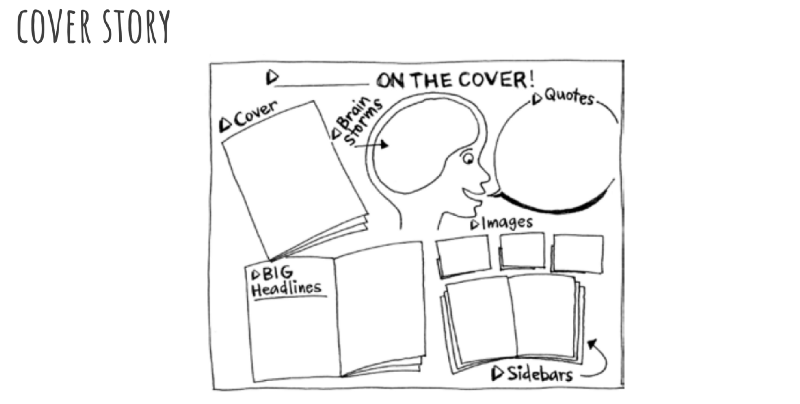

If your app made the cover of the New York Times, what would that page look like? Estelle introduced the Cover Story game, in which your team brainstorms what would appear on that page: goals, headlines, quotes and pictures that could represent your app. By building your app’s newspaper front page, you spot its key assets and see which pieces matter most.

You can also define the purpose of your app by creating a persona. Personas are a common design and communication tool, usually used to describe the main users of your app. Here, you describe your app itself as a persona. It helps you understand its history, goals and personality, and how users perceive it, as if it were a person. It also helps you keep track of what your app is and what you want it to be. It is important that this persona is shared: later on, anyone in your team can ask herself: is this feature aligned with our long-term vision?

2. Add only activities that you really like

What does this mean for our app? Keep only relevant features, but how do you spot them? For that, Estelle introduced another tool: card sorting. Brainstorm with your team and stakeholders to list all the features that come to mind, even the craziest ones (a unicorn with wings), then sort them into big topics (functional areas, for instance). Do not forget a garbage category, as you only want to keep the most important (and realistic) ones! Then apply dot voting (everyone has a number of dots to spread among the features, which scores the most popular ideas) and tada 🎉, you have a list of prioritized features!
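The scoring step of dot voting is simple arithmetic; here is a small TypeScript sketch of it, assuming each participant’s ballot maps feature names to dots and that cards sorted into the garbage category cannot win. The function name and the features are made up for the example.

```typescript
type Card = { feature: string; category: string };
// One ballot per participant: feature name -> number of dots placed on it.
type Ballot = Record<string, number>;

function dotVote(cards: Card[], ballots: Ballot[], dotsPerPerson: number, garbage = "garbage"): [string, number][] {
  // Cards sorted into the garbage category are out of the race from the start.
  const scores = new Map<string, number>();
  for (const card of cards) if (card.category !== garbage) scores.set(card.feature, 0);
  for (const ballot of ballots) {
    const spent = Object.values(ballot).reduce((sum, dots) => sum + dots, 0);
    if (spent > dotsPerPerson) throw new Error(`ballot spends ${spent} dots, the limit is ${dotsPerPerson}`);
    for (const [feature, dots] of Object.entries(ballot)) {
      const current = scores.get(feature);
      if (current !== undefined) scores.set(feature, current + dots); // dots on garbage cards are ignored
    }
  }
  // Highest score first: this is the prioritized feature list.
  return [...scores.entries()].sort((a, b) => b[1] - a[1]);
}

// Usage: three participants with three dots each.
const board: Card[] = [
  { feature: "export to CSV", category: "reporting" },
  { feature: "dark mode", category: "UI" },
  { feature: "unicorn with wings", category: "garbage" },
];
const votes: Ballot[] = [{ "export to CSV": 2, "dark mode": 1 }, { "dark mode": 1, "unicorn with wings": 2 }, { "export to CSV": 3 }];
console.log(dotVote(board, votes, 3)); // [ [ 'export to CSV', 5 ], [ 'dark mode', 2 ] ]
```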

3. Get help from an expert

Who can play the role of the psychologist for our app? Our UX designer! Estelle introduced a few additional games you can play with your users: the 5-second test, sentence completion or de Bono’s Six Thinking Hats, to ensure your app stays on track with what you defined earlier. Understanding how your users see your app is key to ensuring it actually matches your newspaper cover and your persona.

4. Avoid any future overload

Make sure to use analytics solutions so you can keep a close eye on your feature usage and user behavior. This can give you useful metrics to spot improvements or reduce feature overload early.
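As an example of such a metric, here is a hypothetical TypeScript sketch that takes raw usage events (assumed to be `{ feature, userId }` records exported from whatever analytics tool you use) and flags the features used by less than a given share of active users. The threshold is a team decision, and a flagged feature is a prompt for discussion, not an automatic removal.

```typescript
type UsageEvent = { feature: string; userId: string };

// Features used by less than `threshold` (a share between 0 and 1) of active users.
function rarelyUsed(events: UsageEvent[], features: string[], threshold: number): string[] {
  const activeUsers = new Set(events.map((e) => e.userId));
  const usersPerFeature = new Map<string, Set<string>>();
  for (const feature of features) usersPerFeature.set(feature, new Set<string>());
  for (const e of events) usersPerFeature.get(e.feature)?.add(e.userId); // unknown features are ignored
  return features.filter((feature) => {
    const users = usersPerFeature.get(feature)?.size ?? 0;
    const share = activeUsers.size === 0 ? 0 : users / activeUsers.size;
    return share < threshold;
  });
}

// Usage: four active users, only one of whom ever exported anything.
const usage: UsageEvent[] = [
  { feature: "search", userId: "u1" },
  { feature: "export", userId: "u1" },
  { feature: "search", userId: "u2" },
  { feature: "search", userId: "u3" },
  { feature: "search", userId: "u4" },
];
console.log(rarelyUsed(usage, ["search", "export", "dark mode"], 0.3)); // [ 'export', 'dark mode' ]
```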

Conclusion

No miracle recipe here, but good practices to put in place. They can be summarized in 4 steps: analysis, definition/ideation, prototypes/tests and feedback (quite similar to the Design Thinking phases, for those who are familiar with them). Most importantly, it is EVERYDAY work and needs to be a mindset in your team: keeping simplification in mind is similar and complementary to UX.

I think this rings a bell for many developers, and it does for us at Criteo as well: our apps have a lot of features, and we would benefit from applying Estelle’s advice to make them a bit slimmer, reduce their complexity and speed up our delivery pace.

Matthieu Lux presented the result of one of his personal experiments: developing a web app (his own version of the famous 2048 game) using no external code (😱!) and the latest standards whenever possible, as long as it works on at least two different browsers.

Challenge 1: bundling

The first challenge is to load the app efficiently in the browser, which is usually done by bundling all JS files into one with tools such as Webpack, but that approach is ruled out here (no external code allowed!). To overcome this, HTTP/2 was used instead to reduce the overhead of loading many separate files. More precisely, HTTP/2 Server Push was used with a Node.js server, so that only the first client request is needed to retrieve all the app files in one go. Neat!
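Here is a minimal sketch of the Server Push idea with Node’s built-in `http2` module (not Matthieu’s actual server): when the entry page is requested, the server walks a hypothetical module graph and pushes every dependency alongside the HTML, with placeholder bodies standing in for real files. Note that browser support has since been withdrawn (Chrome disabled Server Push by default in 2022), so read this as a snapshot of the 2019 approach.

```typescript
import * as http2 from "node:http2";

// Hypothetical module graph for the game: which files each file pulls in.
const moduleGraph: Record<string, string[]> = {
  "/index.html": ["/app.js", "/style.css"],
  "/app.js": ["/board.js", "/tile.js"],
  "/board.js": ["/tile.js"],
  "/tile.js": [],
  "/style.css": [],
};

// Every file reachable from `entry`, depth-first and without duplicates:
// these are the files worth pushing along with the first response.
function filesToPush(entry: string, graph: Record<string, string[]>): string[] {
  const seen = new Set<string>([entry]);
  const order: string[] = [];
  const visit = (file: string): void => {
    for (const dep of graph[file] ?? []) {
      if (seen.has(dep)) continue;
      seen.add(dep);
      order.push(dep);
      visit(dep);
    }
  };
  visit(entry);
  return order;
}

const contentType = (path: string): string =>
  path.endsWith(".js") ? "text/javascript" : path.endsWith(".css") ? "text/css" : "text/html";

function createPushServer(): http2.Http2Server {
  // Cleartext HTTP/2 keeps the sketch short; browsers only use HTTP/2 over TLS,
  // so a real setup would call http2.createSecureServer({ key, cert }) instead.
  const server = http2.createServer();
  server.on("stream", (stream, headers) => {
    const requested = headers[":path"] ?? "/";
    const path = requested === "/" ? "/index.html" : requested;
    if (!Object.prototype.hasOwnProperty.call(moduleGraph, path)) {
      stream.respond({ ":status": 404 });
      stream.end();
      return;
    }
    stream.respond({ ":status": 200, "content-type": contentType(path) });
    // On the entry page, push every dependency before the client asks for it.
    if (path === "/index.html" && stream.pushAllowed) {
      for (const file of filesToPush(path, moduleGraph)) {
        stream.pushStream({ ":path": file }, (err, pushStream) => {
          if (err) return; // e.g. the session closed before the push could start
          pushStream.respond({ ":status": 200, "content-type": contentType(file) });
          pushStream.end(`/* placeholder contents of ${file} */`);
        });
      }
    }
    stream.end(`<!-- placeholder contents of ${path} -->`);
  });
  return server;
}
```

Calling `createPushServer().listen(8080)` and requesting `/` with an HTTP/2 client that accepts pushes would return the HTML plus push promises for `/app.js`, `/board.js`, `/tile.js` and `/style.css`.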

Challenge 2: making the app

Is Vanilla JS really enough? With the latest JS standard (ES2017) and its ES modules, classes, async functions, spread/destructuring operators, template strings and more, clean and readable code can now run in browsers without Babel (except to support IE 11, but Chrome and Firefox were obviously the targets here). With the Custom Elements and Shadow DOM APIs, it is now possible to build a proper component-based app without a framework.
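A minimal sketch of such a component, assuming a hypothetical `<game-tile value="8">` element for the 2048 board (names and colors are ours, not from the talk): the Custom Elements API registers the tag, and an open shadow root keeps the tile’s styles scoped to the tile. The rendering logic lives in a plain function so the sketch also loads outside a browser; the element itself is only defined where `customElements` exists.

```typescript
// Pure rendering helper, kept outside the element so it can run (and be tested) without a browser.
function tileMarkup(value: number): string {
  const level = Math.log2(value); // 2 → 1, 4 → 2, …, 2048 → 11
  return `
    <style>
      :host { display: inline-block; }
      .tile {
        width: 4rem; height: 4rem; display: grid; place-items: center;
        font: bold 1.5rem sans-serif;
        background: hsl(${40 - level * 3}, 90%, ${90 - level * 4}%);
      }
    </style>
    <div class="tile">${value}</div>`;
}

// Only register the element where the Custom Elements API exists (i.e. in a browser).
if (typeof customElements !== "undefined") {
  class GameTile extends HTMLElement {
    static get observedAttributes(): string[] {
      return ["value"]; // attributeChangedCallback fires when this attribute changes
    }

    constructor() {
      super();
      this.attachShadow({ mode: "open" }); // styles inside stay scoped to this tile
    }

    connectedCallback(): void {
      this.render();
    }

    attributeChangedCallback(): void {
      this.render();
    }

    private render(): void {
      if (this.shadowRoot) this.shadowRoot.innerHTML = tileMarkup(Number(this.getAttribute("value") ?? 2));
    }
  }
  customElements.define("game-tile", GameTile);
}
```

In a page, `<game-tile value="8"></game-tile>` then renders a tile, and changing its `value` attribute re-renders it through `attributeChangedCallback`.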

Challenge 3: animations

Without the nice sliding animations, 2048 gets very hard to play, so the naive approach of destroying and rebuilding all DOM nodes cannot work here. How do you make efficient DOM updates, then? Matthieu went a bit overboard here and wrote his own Virtual DOM implementation, based on this article.
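The core idea of a virtual DOM fits in a few dozen lines of TypeScript: describe the UI as plain objects, compare the previous and next trees, and emit only the DOM operations that are needed. This is a generic, minimal illustration (children matched by index, no keys, no code that applies the patches to the real DOM), not Matthieu’s implementation nor the one from the article he followed; `h`, `diff` and the patch format are our own names.

```typescript
// A virtual node is either a text string or a description of an element.
type VNode = string | { tag: string; props: Record<string, string>; children: VNode[] };

const h = (tag: string, props: Record<string, string> = {}, ...children: VNode[]): VNode => ({ tag, props, children });

// Each patch targets a node by its route of child indexes from the root.
type Patch =
  | { op: "create"; path: number[]; node: VNode }
  | { op: "remove"; path: number[] }
  | { op: "replace"; path: number[]; node: VNode }
  | { op: "setProp"; path: number[]; key: string; value: string }
  | { op: "removeProp"; path: number[]; key: string };

const hasOwn = (obj: object, key: string): boolean => Object.prototype.hasOwnProperty.call(obj, key);

function diff(oldNode: VNode | undefined, newNode: VNode | undefined, path: number[] = []): Patch[] {
  if (oldNode === undefined) return newNode === undefined ? [] : [{ op: "create", path, node: newNode }];
  if (newNode === undefined) return [{ op: "remove", path }];
  // Text nodes, or elements whose tag changed, are replaced wholesale.
  if (typeof oldNode === "string" || typeof newNode === "string" || oldNode.tag !== newNode.tag) {
    return oldNode === newNode ? [] : [{ op: "replace", path, node: newNode }];
  }
  const patches: Patch[] = [];
  for (const [key, value] of Object.entries(newNode.props)) {
    if (oldNode.props[key] !== value) patches.push({ op: "setProp", path, key, value });
  }
  for (const key of Object.keys(oldNode.props)) {
    if (!hasOwn(newNode.props, key)) patches.push({ op: "removeProp", path, key });
  }
  // Children are matched by index. When applying the patches, process
  // "remove" ops from the highest index down so the remaining indexes stay valid.
  const count = Math.max(oldNode.children.length, newNode.children.length);
  for (let i = 0; i < count; i++) {
    patches.push(...diff(oldNode.children[i], newNode.children[i], [...path, i]));
  }
  return patches;
}

// Usage: a row of tiles before and after a move.
const before = h("div", { class: "row" }, h("span", {}, "2"), h("span", {}, "4"));
const after = h("div", { class: "row" }, h("span", {}, "4"), h("span", {}, "4"), h("span", {}, "2"));
console.log(JSON.stringify(diff(before, after)));
```

Here the diff yields only two patches: a text replacement inside the first span and the creation of a third span; the second span is left untouched.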

Conclusion

All in all, by wrapping up the components, state and virtual DOM helpers, he ended up creating his own frontend framework! JS has come a long way, and yes, Vanilla JS is now enough to make an app in 2019, but as long as efficiency and wide browser compatibility are needed, frameworks will still be required to fill the gaps.

You can check out the final code on this GitHub repository.

Unconference wrap-up

This 2019 edition was really great, with a nice organisation, good food and interesting talks with real technical depth. Still, the best part of SnowCamp is the infamous Saturday Unconference day, when we meet again to ski (or snowboard, in my case) together at the Chamrousse ski resort. With the snow that came just in time for the conference and a clear, sunny day, it was the best ending you could hope for after these 3 exciting days.

I would like to thank the organisers of this edition. If there is only one thing I would love to see in the next one, it is talk recordings, so we can catch up on the great talks we could not attend.

See you there next year! 👋

Thanks to Vincent Michau and Veronique Loizeau, who contributed to this article with their reports on the microservices and feature overweight talks, and to everyone who helped with the proofreading.

While you’re here, just a few words to add that we are recruiting! If you like the Grenoble landscape (and its nearby ski resorts) in particular, we are looking for Full-stack and Machine Learning Engineers, so come say hi if you are looking for new challenges!

-

CriteoLabs

Our lovely Community Manager / Event Manager is updating you about what's happening at Criteo Labs.
