Introduction

Machine learning engineers (or ENG-ML in Criteo’s slang) are experts in the development of data processing pipelines. Our role at Criteo is to design these pipelines, to drive their implementation, and to push them to production. This versatile role requires strong skills in applied research but also in computer science and data science. As designers of complex algorithms, we are expected to know and deeply understand the theory behind these methods. Using this knowledge, we must write code that complies with good engineering practices such as robustness and maintainability. We also have to take into account the wider software ecosystem where our software is deployed: using the most relevant technologies, sizing the CPU and memory consumption, and asking for extra resources if needed. We define the SLAs with regard to the other Criteo services that our code interacts with.

At Criteo, the role of an ML engineer can be very different depending on the needs of the team. In this blog post we present the typical tasks of ML engineers at Criteo and how they translate into day-to-day work in two teams: our Prediction team, responsible for machine learning optimization algorithms, and our Recommendation team, working on product recommendation.

Bringing ideas to solutions

Machine learning is a domain that evolves very quickly, in particular given the recent explosion of work in the area of deep neural networks. It is part of our role as ML engineers at Criteo to watch these evolutions and evaluate their value and feasibility in the context of our teams’ current projects. For this purpose, we organize reading groups within and across teams, where we discuss the latest papers and toolboxes. At Criteo, we are lucky to work with very passionate ML people, and the feedback of other ML engineers and researchers helps us determine how well these methods fit the problems we are working on, their integration cost in our ML infrastructure (referred to in Criteo as “The Engine”), and the potential uplift that they could bring for our clients. We also discuss a lot on dedicated internal channels, allowing anyone to express their opinion.

Once a project is chosen, we follow an agile methodology to iterate fast. As ML engineers, we have daily two-way exchanges with the research team to refine the idea and end up with a solution that can be applied to Criteo’s problems.

From Proof Of Concept to Prod

Once we are convinced that a method can be valuable for our use cases, we establish a plan to integrate the new method into our existing system. It starts with a proof of concept that demonstrates the feasibility of the solution in production. This implies dealing with the data fetching system in place, distributing the computation over several machines, and making the result available to the rest of The Engine. It also means making the code robust, easy to monitor, and safe to use in prod: it’s up to the ML engineer to design unit tests and non-regression tests, as well as any monitoring needed. We also do further research on more complex models to analyse the risks, limits and impact of using such models online. Making a new method prod-grade also includes ensuring that it does better than the current model. For this, we design offline metrics tailored to the task, so we can validate our hypotheses before rolling out to production, and we design and implement an A/B test protocol so we can compare the results of the new code with those of the current model on a test population.
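To make the A/B comparison concrete, here is a minimal sketch of one common way to check whether a difference between two populations is statistically meaningful: a two-proportion z-test on a click-through-style rate. The function name, the choice of metric, and the example counts are assumptions made for illustration; the post does not describe the actual protocol used at Criteo.

```python
import math


def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in rates B - A.

    Population A is assumed to receive the current model and population B
    the new one. This is an illustrative convention, not Criteo's protocol.
    """
    rate_a = clicks_a / users_a
    rate_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both populations behave alike.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts, for illustration only.
z, p_value = two_proportion_z_test(clicks_a=100, users_a=10_000,
                                   clicks_b=150, users_b=10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the observed difference is unlikely to be noise, but in practice the choice of metric and the duration of the test matter at least as much as the statistical test itself.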

The Prediction ML team

Team Objectives: Optimization is the main focus of our team. Our objective is to optimize resource usage and learning time while decreasing the prediction error. This can be done either by challenging the current code implementation and pushing it to its limits, or by implementing different losses and optimizers (which can be generic or custom).

Daily challenges: What I like the most about the work in the ML team is adapting the theory, which challenges both our math knowledge and our ability to translate it into code. We need to know exactly which approximations would be efficient and what the limits, specifics and features of the libraries, code, and language are, so we understand what can be done and what is worth trying.

Monitoring and prod code promotion: Adding features or enhancing the code obviously implies changing the expected behaviour. Putting new code in production is always risky because even a small change can impact many other parts of the system. For instance, a new parallelization scheme would mean that the data would not be learned in the same order, and the expected predictions in some non-regression tests would change. Part of our work is to ensure that all the code changes put in prod perform as expected and are safe to use.
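The effect of data ordering on a learned model can be illustrated with a toy example. The sketch below runs one pass of stochastic gradient descent on a one-parameter linear model, first in the original order and then in reverse. The model, the data, and the tolerance are all made up for illustration and have nothing to do with The Engine; the point is only that an exact-match non-regression test breaks when the order changes, while a tolerance-based one can still pass.

```python
def sgd_fit(samples, learning_rate=0.1):
    """One pass of SGD on y ~ w * x with squared loss; returns the weight w."""
    w = 0.0
    for x, y in samples:
        gradient = 2 * (w * x - y) * x
        w -= learning_rate * gradient
    return w


def same_within_tolerance(expected, actual, tolerance):
    """Non-regression check that accepts small numerical drift."""
    return abs(expected - actual) <= tolerance


# Toy data, for illustration only.
data = [(1.0, 2.1), (2.0, 3.9), (0.5, 1.2), (1.5, 2.8)]

w_original = sgd_fit(data)
# A different processing order, as a new parallelization scheme might produce.
w_reordered = sgd_fit(list(reversed(data)))

print(w_original == w_reordered)                             # exact match fails
print(same_within_tolerance(w_original, w_reordered, 0.1))   # tolerance passes
```

Choosing the tolerance is itself a judgment call: too tight and every harmless change breaks the test, too loose and real regressions slip through.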

Academia and knowledge sharing: Another part of our work is communication and knowledge sharing, as we need to present to our colleagues the different results and methods that we have tried. We also present ML basics in a form accessible to everyone at Criteo. We are encouraged to spend some of our work time taking courses, reading articles, and discussing methods within the team or with researchers.

Side project: When we have an idea that we believe is worth trying, we do a PoC (proof of concept) to get some first results out of the door fast. If the results are positive, we can promote it to a team project.

The Recommendation ML team

Team Objectives: The Recommendation (Reco) team is in charge of filling advertising banners with products personalized according to the navigation history of our end users. Our main challenge is to deliver an accurate recommendation in less than 20ms, based on product catalogs that contain many billions of products and change very frequently.

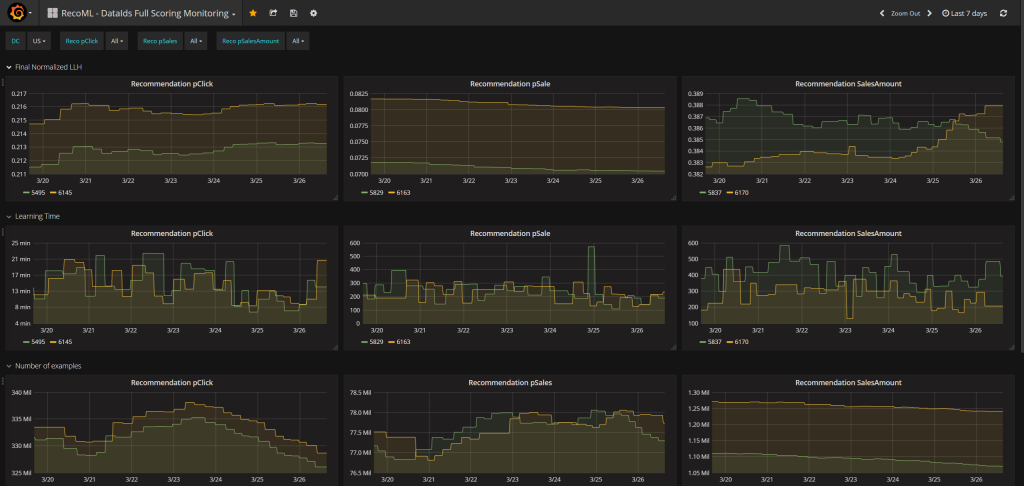

Challenges: Being part of Reco requires a lot of flexibility due to the multi-tasking aspect of the team. Our work requires a specific infrastructure (to be able to access a retailer’s catalog at prediction time) and a validation feedback framework, because we are interested not only in numbers but also in measuring how users react to our recommendations. Consequently, our role as machine learning engineers mainly consists of improving recommendation by incorporating new features and trying out new algorithms while, at the same time, taking care of the platform by monitoring the infrastructure and addressing any concerns regarding production.

Academia and knowledge sharing: In order to follow the latest advancements in recommender systems, we have regular meetings to share knowledge about our latest readings. I also take part in conferences and organize the RecSys FR meetups. These initiatives, highly valued at Criteo, allow me to improve myself while bringing value to the company. I also work with researchers on a daily basis. For example, I had the opportunity to help researchers design new offline experiment metrics (see our paper published at WSDM 2018).

History of an ML Driven Project

Bringing Prod2Vec to production: Prod2Vec is a project that was initiated by the Reco research team. Its deployment in our recommendation framework was typical of the work we do. The ENG-ML engineer is deeply involved at each step of the process, starting with establishing a full plan from the first experiments to the final rollout. Here are the steps followed for this project and the role of the ENG-ML in each of them:

Battle plan design: Prod2Vec consists of creating embeddings for the products in our catalog, which can be used as a simple ranking feature or as a complete recommendation component by themselves. The ENG-ML prioritises the experiments, determining how to test the value of each proposition without investing too much in development: we do not want to redesign the whole system for a simple feature.
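As a rough illustration of how product embeddings can serve as a ranking feature, the sketch below scores candidate products by cosine similarity to a product the user has already seen. The three-dimensional vectors, the product names, and the use of cosine similarity are assumptions chosen for readability; real embeddings are learned from data and the post does not specify how they are scored.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_candidates(seen_product, candidates, embeddings):
    """Order candidates from most to least similar to the seen product."""
    query = embeddings[seen_product]
    scored = [(cosine_similarity(query, embeddings[c]), c) for c in candidates]
    return [c for _, c in sorted(scored, reverse=True)]


# Hand-written toy embeddings, for illustration only.
embeddings = {
    "running_shoes": [0.9, 0.1, 0.0],
    "trail_shoes": [0.8, 0.2, 0.1],
    "coffee_maker": [0.0, 0.1, 0.9],
}

ranking = rank_candidates("running_shoes",
                          ["coffee_maker", "trail_shoes"],
                          embeddings)
print(ranking)  # ['trail_shoes', 'coffee_maker']
```

Used this way, the similarity score is just one more feature that an existing ranking model can consume, which is what makes it cheap to test before committing to a larger redesign.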

The ENG-ML then prepares everything for the experiment. It involves partnering with a product manager if the explicit consent of our partner is required, and also with an infra engineer to ensure we have the required resources. Making embeddings available for our online system required some additional memory so, in the case of Prod2Vec, we asked for a small pool of servers and got them the day after thanks to our infra team.

We then ran tests in our offline experiment framework to get the first estimations of the value of the feature, and tuned the cases where it was proven to be most beneficial. Our role here is to get numbers and if they are good enough, advocate for the project with colleagues in order to validate the online test.

We then move on to the online A/B test. Here we helped design the experiment and determined which metrics we needed to monitor. Again, we advocated for the project in front of a committee that decides whether it should go online or not.

Finally, the project goes to production! At this point, we have to make the system prod-grade, which may imply a deeper refactoring of the code. These changes are driven by the ENG-ML, who will ask for resources from other teams if needed. In the Prod2Vec case, all the checks done manually on the embeddings had to be automated for production, and we needed to adapt our system to allow anybody at Criteo to test enhancements to it. We also had to set up the monitoring for this new source of data, ensuring the quality of the data over time.
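To give an idea of what turning manual embedding checks into automated ones might look like, here is a minimal sketch. The specific checks (vector dimension, finite values, catalog coverage), the function name, and the threshold are hypothetical; the post does not list the checks that were actually run on the Prod2Vec embeddings.

```python
import math


def check_embeddings(embeddings, catalog_ids, expected_dim, min_coverage=0.95):
    """Return a list of human-readable problems; an empty list means all passed.

    All checks and thresholds here are illustrative assumptions.
    """
    problems = []
    for product_id, vector in embeddings.items():
        if len(vector) != expected_dim:
            problems.append(f"{product_id}: wrong dimension {len(vector)}")
        elif not all(math.isfinite(x) for x in vector):
            problems.append(f"{product_id}: non-finite value")
    # Share of catalog products that have an embedding at all.
    covered = sum(1 for pid in catalog_ids if pid in embeddings)
    coverage = covered / len(catalog_ids) if catalog_ids else 0.0
    if coverage < min_coverage:
        problems.append(f"coverage {coverage:.2f} below {min_coverage:.2f}")
    return problems


# Toy input: one product has a NaN and another has no embedding.
toy_embeddings = {"a": [float("nan"), 0.2]}
for problem in check_embeddings(toy_embeddings, ["a", "b"], expected_dim=2):
    print(problem)
```

Running such a function on every new batch of embeddings, and alerting when the list is not empty, is one simple way to monitor data quality over time.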

Wrapping up

ENG-ML is a very versatile role that can take different forms depending on the team. The common factor is operational knowledge of both machine learning theory and software engineering. Working at Criteo with such passionate and talented people is a real pleasure, and we are never bored given the ongoing challenges. Do not hesitate to contact us to learn more!

Written by: Syrine Krichene (Software Engineer) and Alexandre Abraham (Software Engineer)