Criteo uses a lot of Scala in its code-base. This originally started by experimentations with big data jobs and data science scripts, but quickly it became evident that Scala would be very useful for application development too.

At the beginning, like in most companies, it was first used as a “shorter Java”. But eventually, as more and more teams launched Scala projects, the practice of Scala as a real FP language improved and we started seeing the promise of more robust and safer programs. Today a large chunk of our new software projects use Scala and advanced functional programming libraries such as as cats, doobie, circe or parser combinators.

At the beginning, like in most companies, it was first used as a “shorter Java”. But eventually, as more and more teams launched Scala projects, the practice of Scala as a real FP language improved and we started seeing the promise of more robust and safer programs. Today a large chunk of our new software projects use Scala and advanced functional programming libraries such as as cats, doobie, circe or parser combinators.

Introducing truly functional code in a large software company like Criteo is not without challenge however. Let’s talk about what’s worked well as well as where the dragons lie.

Coming from an imperative programming background

From the beginning Criteo was mostly a .NET shop where the main programming language was C#. It goes without saying that Criteo developers are very good, but as in most big tech companies they have mainly an imperative programming background and come from many years of Java, C# or C++ pratice.

Indeed, our interview process is optimised to find the best programmers and we ask many questions to assert that the candidates are able to find very performant solutions to difficult algorithmic problems.

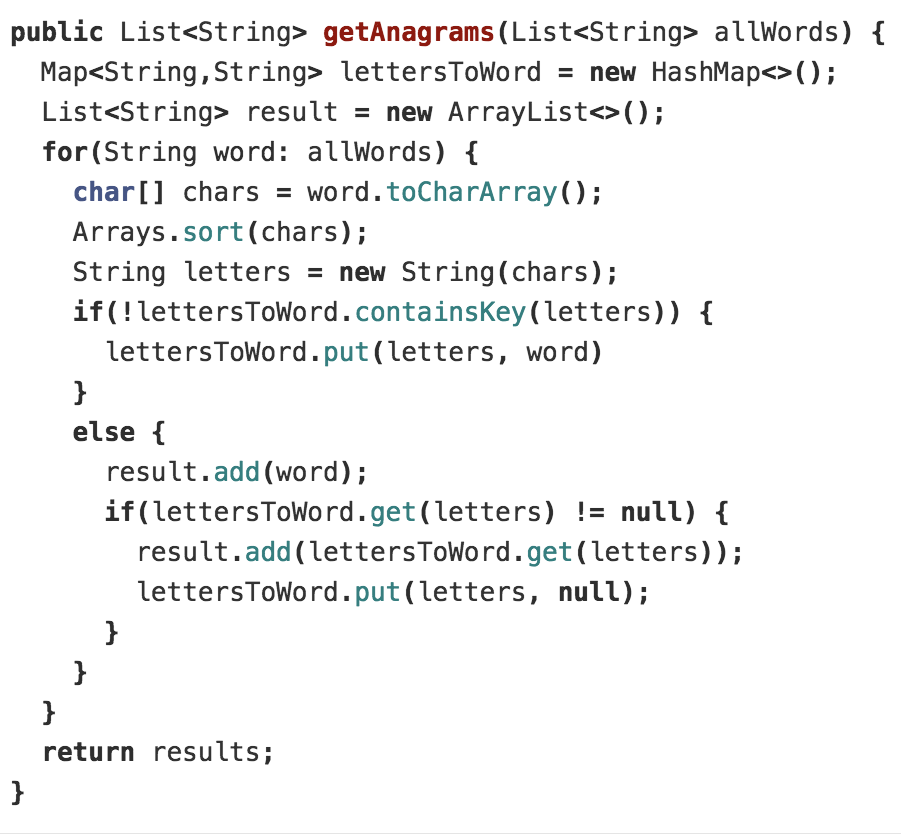

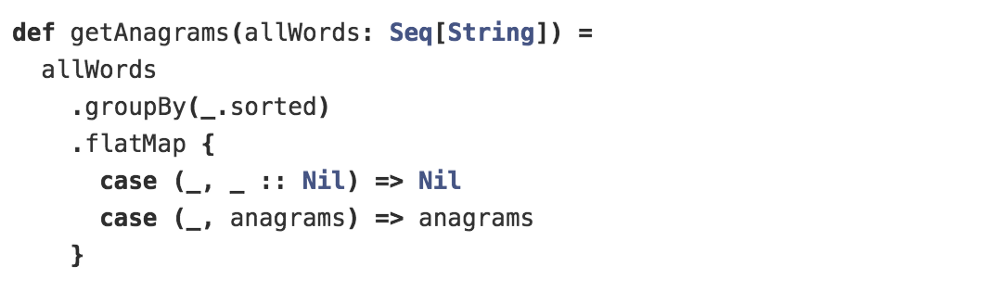

We usually favor solutions employing imperative algorithms with optimal data structures. For example the recommended solution to find all the anagrams in a list of String would be along the lines of:

Bonus points if the candidate uses a null pointer as a special flag to avoid storing the intermediate list of anagrams and optimize the storage complexity! While a developer thinking more in a functional way would probably favor a solution like:

Which has exactly the same runtime complexity by the way 🙂

Which has exactly the same runtime complexity by the way 🙂

But yes most developers will find that solution suspicious, especially regarding its “performance”. Perhaps because it looks too high level and good developers know so much about the data structure internals that they want to stay “in control” of what really happens.

So introducing functional programming this way is usually difficult.

Introducing the JVM at Criteo

In addition to being a .NET shop, Criteo also historically used SQL Server as data storage extensively. But with the exponential growth of the company it quickly became impossible to store our analytics data on a SQL Server cluster, even a giant one, and even with sharding!

So someone smart started to experiment with Hadoop and we finally got an Hadoop cluster. And with that, thousands of linux servers and JVMs!

So someone smart started to experiment with Hadoop and we finally got an Hadoop cluster. And with that, thousands of linux servers and JVMs!

Initially all the code we wrote was just plain Java map reduce jobs, but quickly we started to use Cascading to abstract away much of the complexity of Hadoop, and then Scalding to abstract away much of the complexity of Cascading 🙂

First Scala code base at Criteo appeared! And doing a flatMap on terabytes of data is so cool that everyone loved it!

The ScaVa area

With great power comes great responsibility. And as more people started using the giant Hadoop cluster to process and produce tons of data, the teams in charge needed to produce more tooling to keep it at a high quality level.

Schedulers, execution platforms, SQL parsers, you name it, Scala became the natural choice for these projects. The data engineering teams were also spending a lot of time working on big data jobs using the usual suspects of Spark and Scalding and we quickly learned that using Scala in a big data ecosystem and using Scala for application development is a different beast. Starting a new non big data Scala project from scratch turned out to be intimidating with the vast choice of styles and libraries available to the budding Scala dev.

At this time, we were mostly writing a mix of Java and Scala the we called “ScaVa”. It was not uncommon to see Java code appearing in Scala project because “it was simpler for me to do it this way”. Or to benchmark Java collections against the Scala one to be sure about the final performance of the application.

At this time, we were mostly writing a mix of Java and Scala the we called “ScaVa”. It was not uncommon to see Java code appearing in Scala project because “it was simpler for me to do it this way”. Or to benchmark Java collections against the Scala one to be sure about the final performance of the application.

Our development stack solidified around the Twitter Scala stack, mostly because we shared the same set of concerns as Twitter, especially around the data volume and performance. These libraries (Finagle, Scalding, Algebird) ended up being very important for Criteo by providing the critical features we needed like metrics and tracing across a complex software architecture. But they were also not very Scalaish, in the sense that they were not sharing some of our growing concerns around being Pure, typeful or focused on Functional Programming patterns.

Growing a new generation of FP developers

This necessary first step of experimenting with Scala and hybrid imperative/FP development style allowed us to improve the FP culture at Criteo: more workshops, more people going to Scala conferences, more focus on the safety and robustness of our programs.

Eventually Criteo started to became a Scala shop too, and with that started to hire more and more developers interested by Scala and Functional programming. Not only Scala then, also people with different background coming from OCaml, F# or even Haskell joined and helped us to improve our Functional Programming practices with Scala.

We started integrating more advanced Scala libraries and today most of our Scala projects are built around projects from the Typelevel community like cats, fs2, doobie, algebra, etc.

My tools do not work anymore

However a number of challenges make it difficult to onboard developers to a more advanced FP style. The main one is that switching from an imperative programming model to a functional programming model is not only a jump in the way of thinking, it also leaves a lot of tools on the side of the road.

However a number of challenges make it difficult to onboard developers to a more advanced FP style. The main one is that switching from an imperative programming model to a functional programming model is not only a jump in the way of thinking, it also leaves a lot of tools on the side of the road.

The most important one is the IDE. Developers love their IDE — it does so much for them! The problem is that good IDEs have been designed for imperative programmers. For example the step-by-step debugger is the usual way developers try to fix their programs. But for lazily evaluated FP programs it does not work so well. I have seen many developers trying to debug a Scala program this way, stuck on a sort operation that was not done “in place” like most expect.

IDEs also do not support very advanced Scala feature like macros and sophisticated types, making some people reluctant to include some otherwise very useful libraries, so as to avoid seeing their IDE completely lost, not able to correctly type an expression, and outrageously reporting a false error in their perfect code!

Franken-Scala for Big Data

Scala for big data and data science has very little in common with the rest of the Scala ecosystem. They do share the same base language but the style and the concerns are very different.

Scala for big data and data science has very little in common with the rest of the Scala ecosystem. They do share the same base language but the style and the concerns are very different.

For example, let’s take JSON parsing: while a classic Scala developer will probably favor code robustness and expressivity in JSON parsing and choose a library like Circe, when writing a big data job dealing with billions of one-level depth JSON documents you will probably favor performance and memory consumption and choose the fastest JSON parser for (probably) Java you can find!

In a general way, when I work on a Scala program I encode as many constraints as I can into my type, and when my program compiles it usually works or at least I am very close to getting it right! However when working on a Scala Big Data job, making it compile is usually easy but it can take days to make it run properly on a Hadoop cluster!

This is because there is so much accidental complexity in using Scala (or Java) when developing a big data job on Hadoop. From all the magic around serializing to the network, to classpath issues, to file formats, etc.

This turns out to be very comprehensible: most of the guarantees brought by Scala are destroyed at I/O boundaries. A Big Data job has a large, complex surface area in contact with the outside world, and this complexity dominates the problem.

Haunted by old patterns ghosts

Most developers coming to Scala don’t arrive wide eyed and innocent. They have usually years of programming practice behind them, and if they spent most of their career working in an imperative programming environment then they have learned a lot of patterns and “best practices” they now have to somewhat forget.

Most developers coming to Scala don’t arrive wide eyed and innocent. They have usually years of programming practice behind them, and if they spent most of their career working in an imperative programming environment then they have learned a lot of patterns and “best practices” they now have to somewhat forget.

The classic question from a Java developer coming to Scala is: “ok so where is the framework for dependency injection?”. DI has become so important in Java that entire tools and solutions dedicated to the problem appeared. Not to say that it is not a problem in Scala,but usually simple solutions built around implicit contexts do the job and it is very rare and not very idiomatic to bring a DI framework to your Scala codebase — especially if it brings in mutability everywhere!

We also often have the same kind of discussion around the use of (especially early) return that is often favored in Java and imperative programming but being a jump in the control flow, not idiomatic in Scala.

Desperately seeking the new right patterns

Eventually a newcomer in Scala and FP must forget a lot of the practices they have learned over time and so they seek the new “right” patterns to apply to their shiny new Scala problem.

Eventually a newcomer in Scala and FP must forget a lot of the practices they have learned over time and so they seek the new “right” patterns to apply to their shiny new Scala problem.

Again there is a trap here as only experience can distinguish what is useful and what is not and which pattern to apply in which situation. Unfortunately, a lot of blog posts promising a lot of extraordinary new programming practices are quite appealing to fresh new eager to learn Scala programmers!

Scala being a very rich programming language with many experimental features also does not help. It takes a lot of time for a developer to know what to use, how to use it, and what is the proper style to adopt.

Fortunately it is easier to do in a team and to build a coherent style in a company as more and more Scala projects are born and die off.

Criteo is a real Scala shop!

Despite all the challenges of introducing functional code in a large software company as Criteo, we can say that today it is a real success and that Criteo is really a Scala shop. Scala is widely used for a large typology of projects, including the most demanding ones like serving more than 10 billion of on demand generated product pictures which is done by a Scala service distributed around the globe across all of our datacenters.

We also try to contribute back to the Scala community and to open source most of our Scala tooling like for example, Cuttle our job executer and scheduler, vizsql to parse and manipulate SQL queries or Babar to profile distributed applications running on Hadoop.

-

CriteoLabs

Our lovely Community Manager / Event Manager is updating you about what's happening at Criteo Labs.

See DevOps Engineer roles